SPEECH ANALYTICS THROUGH DEEP LEARNING NEURAL NETWORKS

At VoiceBase we believe speech analytics is a game of “what is most likely to have been said here.” Different companies have different strategies for answering that question with the highest degree of accuracy. Most of the Natural Language Processing (NLP) solutions developed in the last few years use some form of machine learning to achieve artificial intelligence. However, many of those methods have been stuck in the search & optimization era, where they mine lots of data for simple queries, like matching phonetic patterns to produce a transcript or searching for single words. This approach works for a few very specific use cases. To create a speech analytics solution that serves any industry and returns actual answers to the questions companies have, the solution needs to do more than provide a transcript archive for the customer to search. It needs to understand context and sentence structure, and to mine data for complex events that unfold over a conversation. To accomplish this, industry-leading companies like VoiceBase, Google and Amazon use deep learning neural networks, which are on the bleeding edge of machine learning.

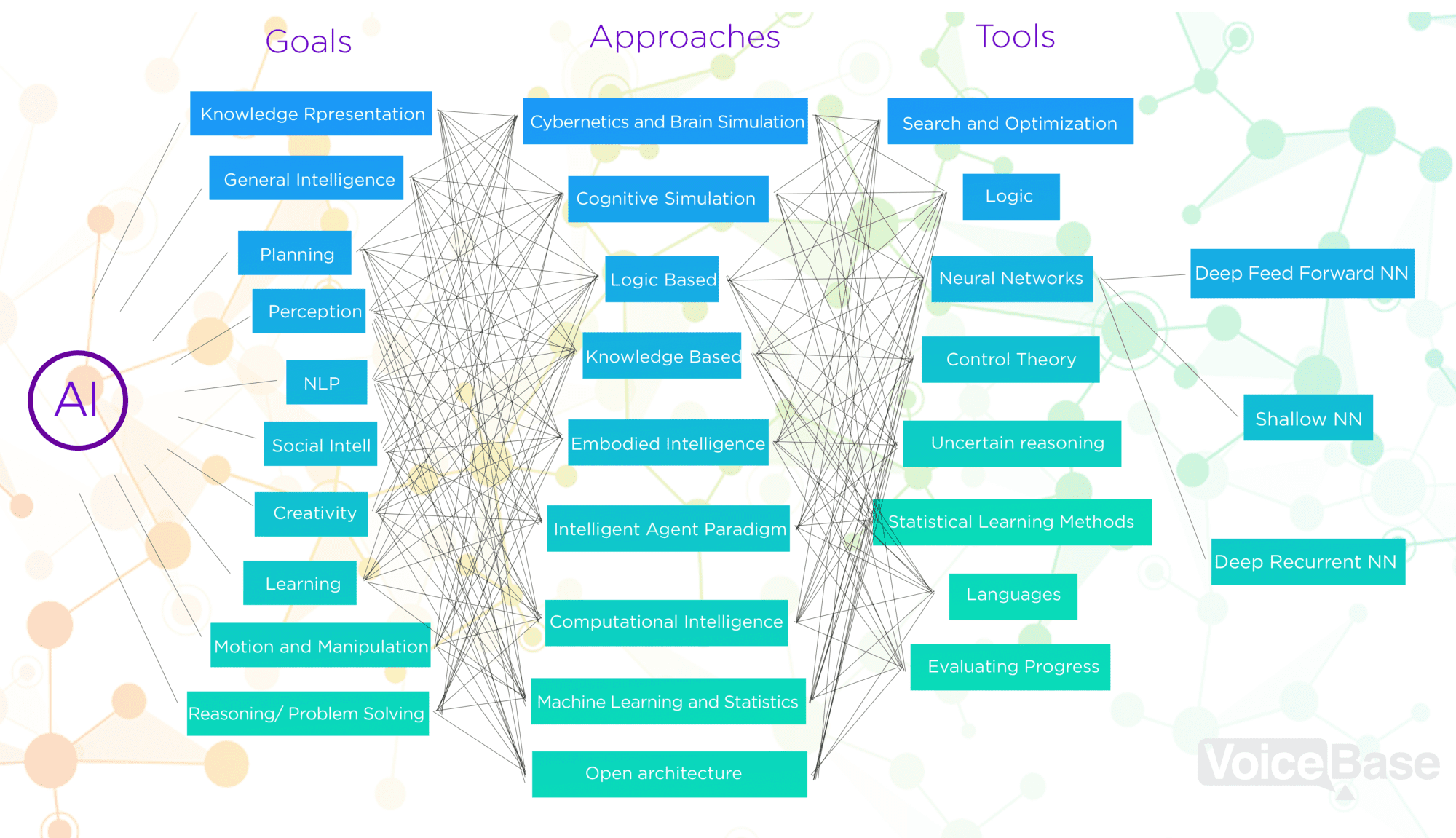

I’ve found that the more an industry uses the terms artificial intelligence, machine learning and deep learning, the more confusing each term becomes. What is fluff and what is fact? Some people seem to think these words are synonymous, while others think deep learning is just a fancier term for machine learning. In reality they are all related, but each has its own definition and they are quite different from one another. Although they are all great buzzwords for Marketing and Product teams to throw around, it is important to understand the differences. So I thought I’d do a deep dive into how we at VoiceBase, an artificial intelligence company, define artificial intelligence, machine learning and deep learning as applied to our speech analytics API solution.

THE TRANSFORMATION OF ARTIFICIAL INTELLIGENCE

Artificial Intelligence (AI) is not a new buzzword. The concept of computer systems performing tasks that normally require human intelligence is far from new. In this broad sense, AI has been around since the 1600s, when the first mechanical calculators were developed to carry out operations like multiplication and division rather than counting numbers to produce an answer. Other early investments in AI were also primarily mathematically driven, such as Alan Turing’s use of the theory of computation to theorize the Turing Machine. For a long time these investments were based on methods driven by Pattern Matching, Logic, and Statistical Learning. It wasn’t until further advancements in technology and science in the 1980s, 90s, and 2000s that the methods driving AI became primarily machine learning based. Those advancements include the wide availability of GPUs that make parallel processing better, faster, stronger (and cheaper), practically unlimited storage and a flood of every flavor of data (Big Data, Big Voice), and further R&D on recurrent neural networks.

Machine Learning Algorithms

The idea for machine learning was born when scientists realized that, rather than teaching computers everything they need to know about the world and how to carry out tasks, it might be possible to teach them to learn for themselves. The question then became: what is the best way to teach a computer to learn?

Deep Learning Neural Networks

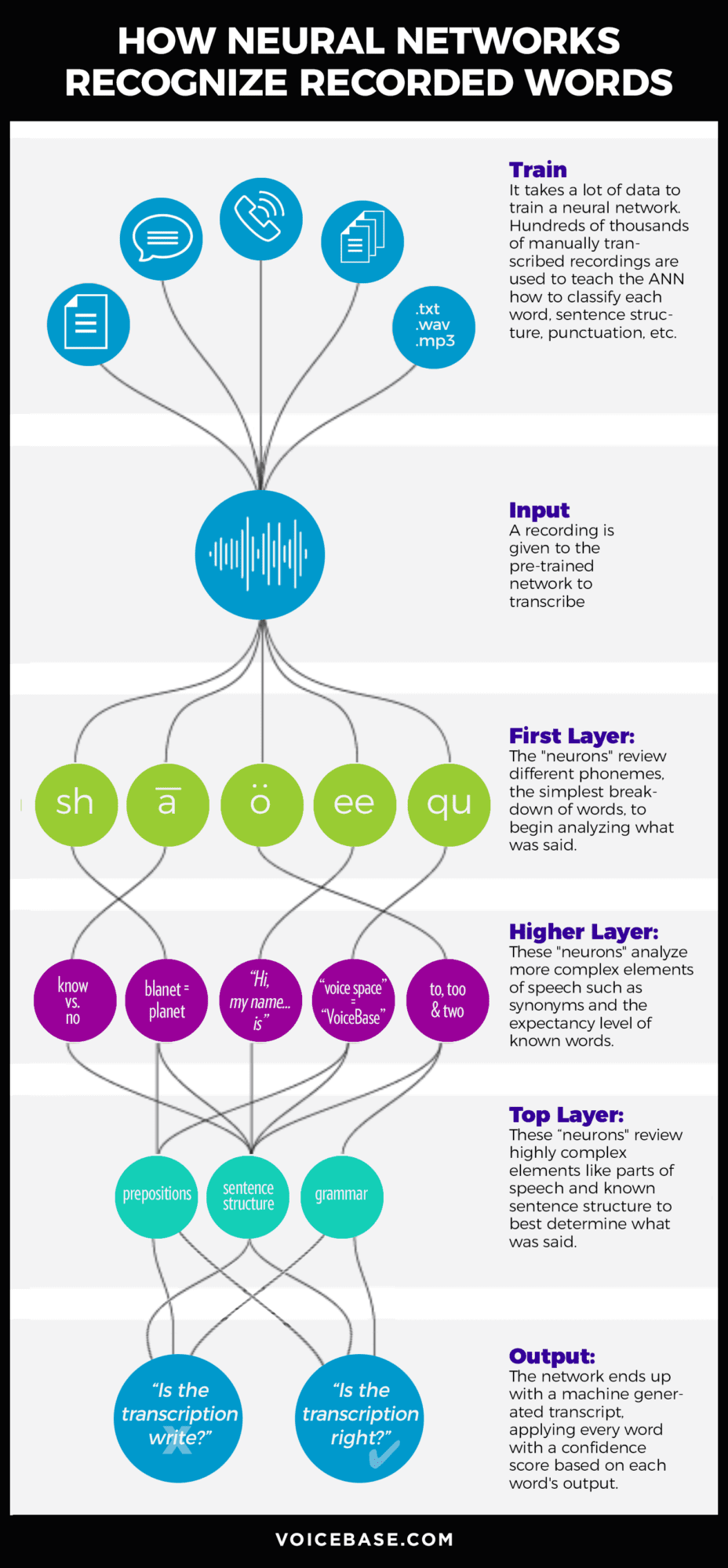

Deep learning is a structural organization applied to artificial neural networks (ANNs), resulting in ANNs that contain more than one hidden layer. Artificial neural networks are a more recently developed concept, designed to mimic the biology of human brain function. Each neural network has an input layer and an output layer. The input layer consumes the data needed to make a prediction, while the output layer generates the predicted results. The hidden layers are the layers between input and output. Deep learning neural networks typically contain many hidden layers, which give the network the ability to learn more complex tasks.
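To make the layer structure concrete, here is a minimal sketch in plain Python of data flowing from an input layer, through two hidden layers, to an output layer. The layer sizes, the random weights and the ReLU activation are illustrative assumptions, not a description of VoiceBase's models, and the network here is untrained.

```python
import random

def relu(values):
    # A common activation function: negative values become zero.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One fully connected layer: each row of weights belongs to one neuron.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def random_layer(n_in, n_out, rng):
    # Hypothetical untrained layer with random weights and zero biases.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    # Pass the data through every layer; hidden layers get an activation,
    # the final (output) layer returns its raw scores.
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

rng = random.Random(0)
layer_sizes = [4, 8, 8, 3]  # input, two hidden layers, output (assumed sizes)
layers = [random_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
output = forward([0.5, -0.2, 0.1, 0.9], layers)
print(output)
```

Adding more entries to `layer_sizes` adds more hidden layers, which is what makes a network "deeper" in this sketch.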

Within these artificial neural networks are individual “neurons,” or information nodes, where very specific variables of the data set are reviewed. After this review, each neuron assigns a weighting to its output: how correct or incorrect it is relative to the data review requirement.

This weighting system produces a single weighted result that feeds into the probability computed for the overall task of the entire neural network. Basically, a highly educated guess.
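One common way to formalize a single neuron is a weighted sum of its inputs passed through a squashing function. The sketch below assumes a sigmoid activation and made-up weights; it illustrates the general idea rather than the exact computation used in any particular product.

```python
import math

def sigmoid(x):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    # Weighted sum of the inputs plus a bias, followed by the activation.
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)

# Hypothetical inputs and weights, chosen only for illustration.
activation = neuron([1.0, 0.0, 1.0], [2.0, -1.0, 0.5], -1.0)
print(activation)
```

The output is a number between 0 and 1, which downstream neurons then weigh in the same way until the network produces its final guess.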

Imagine you’re in a conversation with someone and they mumble something you don’t quite hear; what goes through your mind as you determine what they said?

• “Hmm they mumbled, did they not want me to hear it? Was it rude?”

• “We were just talking about that appointment I had to cancel, was it related to that?”

• “What did they say right before the mumble?”

• “What is happening right now? Did they keep talking and brush over it?”

• “Are they waiting for my response? Did the conversation end?…”

This is an example of the type of thinking and reasoning that we try to structure our deep learning neural network to go through.

Neural networks are typically used as solutions to problems which cannot be solved with complete logical certainty, and where an approximate solution is often sufficient, like “what is most likely to have been said here?”
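As a rough illustration of “what is most likely to have been said here,” the sketch below turns scores for a few candidate transcriptions into probabilities with a softmax and picks the most likely one. The candidate phrases and their scores are invented for the example; in a real system they would come from the acoustic and language models.

```python
import math

def softmax(scores):
    # Subtract the maximum score first for numerical stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidates and scores, not real model output.
candidates = [
    "cancel my appointment",
    "can sell my appointment",
    "council my apartment",
]
scores = [4.1, 2.3, 0.2]

probabilities = softmax(scores)
best_phrase, best_probability = max(
    zip(candidates, probabilities), key=lambda pair: pair[1]
)
print(best_phrase, round(best_probability, 3))
```

The network never claims certainty; it only ranks the candidates and reports the most probable one.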

THE FUTURE OF ARTIFICIAL NEURAL NETWORKS

At VoiceBase we extensively use deep learning neural networks, especially in our speech recognition engine – we use them not only for acoustic models but also for language models.

The future of neural networks looks very interesting from where we sit, as there is a range of developments with the potential to dramatically improve the learning process. First, there are generative adversarial networks. Without going into the details, this is a setup where two different neural networks are played against each other: one tries to create fake training data, while the other tries to tell real data from generated data, with the results fed back into both networks so that each improves. The result is that these two neural networks can more or less train themselves. These types of networks have proven to be very powerful in image recognition, and image recognition is often a precursor to speech recognition, so we expect to see these types of networks used in speech recognition in the future.

Another development we see in AI addresses a limitation of neural networks. Right now everyone is forced to use gigantic neural networks with tens or hundreds of thousands of neurons (and an insane number of synapses). Those networks take weeks to train and consume enormous computing resources. One focus of research has been finding ways to train small neural networks and combine them with little or no loss. This is one of the areas where VoiceBase is doing research, and we hope to put this type of forward thinking to use in the future.
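One simple, widely used way to combine several small models is to average their output probabilities (often called ensembling). The sketch below assumes three small models that each return a probability per class; the numbers are made up, and averaging is shown only as an example of combining networks, not as the specific approach VoiceBase is researching.

```python
def average_predictions(predictions):
    # Average the per-class probabilities across all models.
    count = len(predictions)
    return [sum(column) / count for column in zip(*predictions)]

# Hypothetical outputs of three small models for the same input.
small_model_outputs = [
    [0.70, 0.20, 0.10],
    [0.60, 0.30, 0.10],
    [0.80, 0.10, 0.10],
]

combined = average_predictions(small_model_outputs)
best_class = combined.index(max(combined))
print(combined, best_class)
```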

Lastly, another drawback of neural networks lies in how they learn: the correlations they discover remain mostly in a black box. Another focus of our research is finding ways to extract knowledge from this black box, both to learn from it and to further improve the fields we apply our neural networks to.

About VoiceBase

Want to leverage VoiceBase’s deep learning neural network powered speech analytics?